Set up Google Ads to BigQuery export

Want to build a custom Google Ads dashboard in other (non-Google) BI tools or analyze/merge raw data with other sources using SQL?

In this short post, we will take a look at how you can set up Google Ads to BigQuery export without using any 3rd party connectors. If your BI tool doesn’t support direct integration with Google Ads, this might be one of the easiest (and cheapest) ways how to set up automatic Google Ads data export.

Prerequisites

Before we continue make sure you have:

Google Cloud account with BigQuery project

You can find how to create one using Google’s help center.

Alternatively, you can check Step 2 from this article with examples of how to set up a Google Cloud account. Usually, BigQuery API and BigQuery Data Transfer Service are enabled by default.

Access to Google Ads account

To connect data you will need to have access to a Google Account which you want to import data from.

(optional) Add an additional Google Ads email account to BigQuery

In case Google Ads access is on another email (not on account associated with Google Cloud) you will need to add it with appropriate permissions.

If Account is owned by you, you can just add BigQuery Admin permissions, or very specific roles required to set up data transfer:

bigquery.transfers.updatepermissions to create the transfer- Both

bigquery.datasets.getandbigquery.datasets.update

You can check this documentation if you want to use a more advanced approach for permission management.

Set up Data Transfer from Google Ads to BigQuery

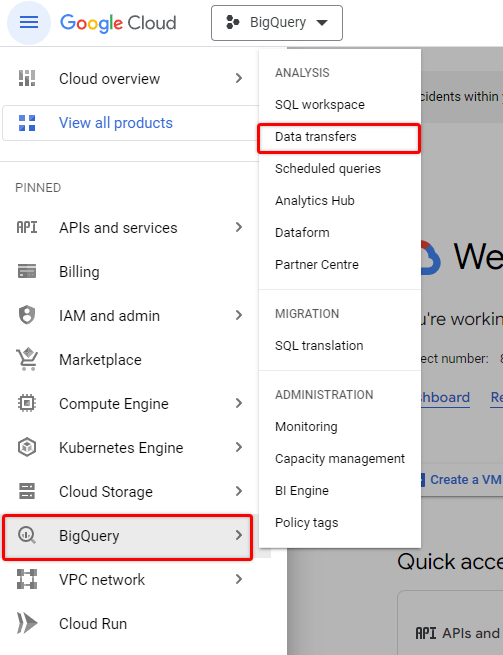

Open your Google Cloud project and from the menu go to BigQuery > Data Transfers.

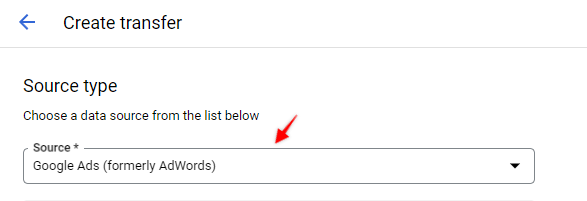

Click on “Create new Transfer” and from the source select “Google Ads”.

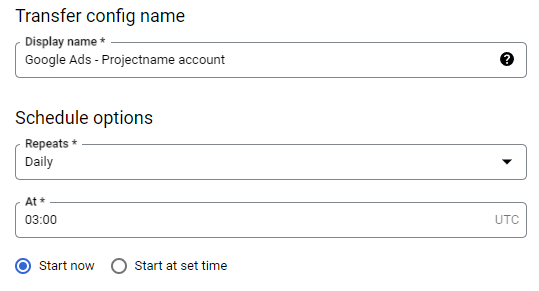

Provide descriptive data transfer name and select transfer schedule. Depending on how often you want this data to be loaded from Google Ads to BigQuery you can change this here.

If you want to export this daily you can select “Daily” and provide a time when it should run every day.

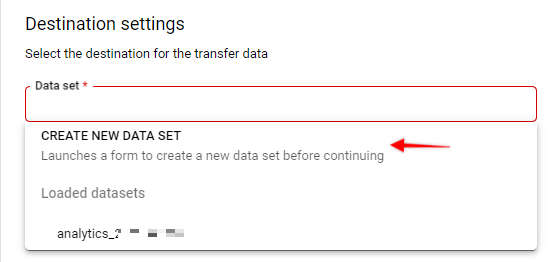

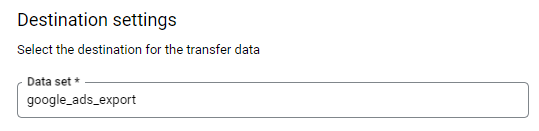

Then you need to provide a destination for data export. There will be multiple tables created by this export so it’s best to use a separate dataset.

Click on the Data set field and Create a new data set.

Provide details for new data set in the prompt shown by google and it should now appear in the input field.

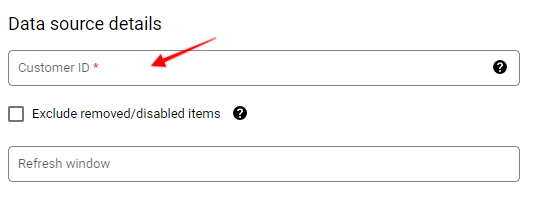

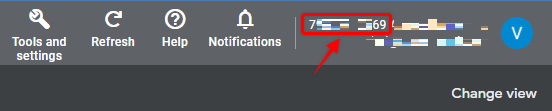

Next, you need to provide your Google Ads account ID.

You can usually see that ID on the top right corner. Copy that value and paste it into this field.

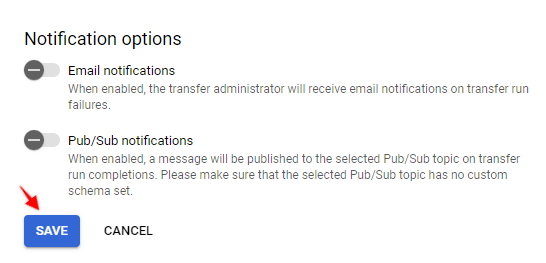

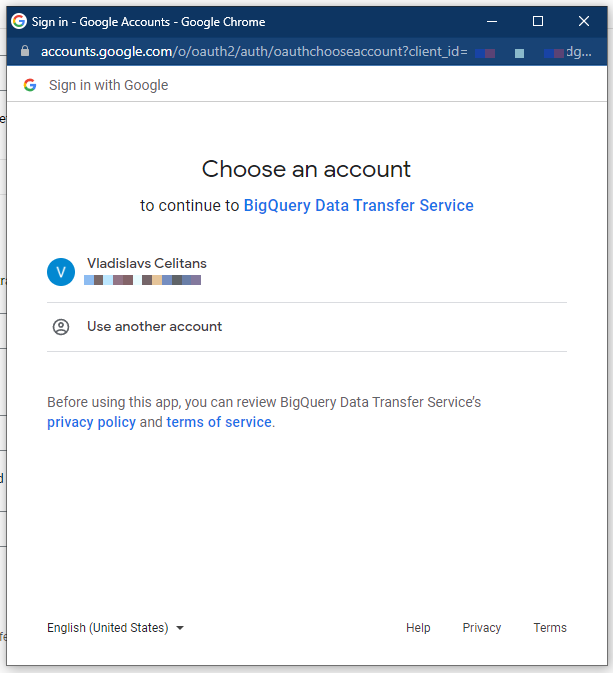

Click “Save” on the Transfer setup page and you will be asked to authorize to provided Ads ID.

You can authorize access from your existing account or use another one (just make sure that Google account is added with sufficient accesses – more on that below).

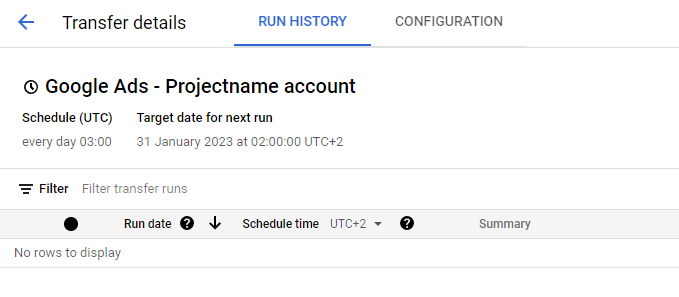

If everything goes well then the transfer should be successfully created and you should see details page:

There will be no data yet, and it will be loaded next time according to the schedule that you have just specified. Next, will take a look at how to get historical data from Google Ads to BigQuery.

Possible error messages

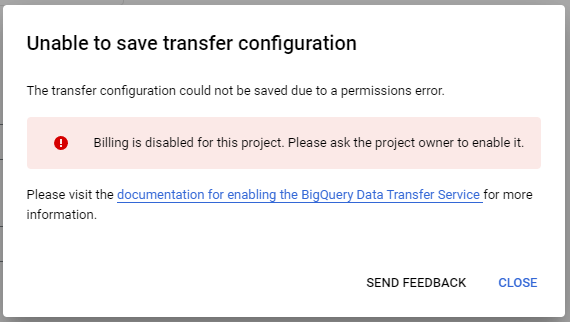

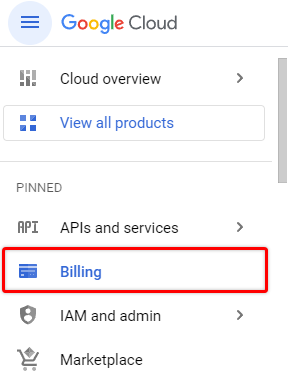

If you haven’t added billing details to Google Cloud, you might see a following error message after trying to save data transfer.

To fix that just visit the “Billing” section and make sure you have an active payment method added and a Billing account created. It doesn’t mean that you will be charged right away for your data export, in most cases, it will be enough with the free tier limits that BigQuery provides. But in case you do more complex queries or load a lot of data you might start seeing some costs in your bill.

Another error message that might be shown could be related to insufficient permissions. Make sure that email that has access to Google Ads has access to BigQuery as well. This was mentioned in the Point 3 in the prerequisites.

You need to have a BigQuery Admin role for that email or more specific roles mentioned in Google Cloud documentation.

Loading historical data from Google Ads into BigQuery

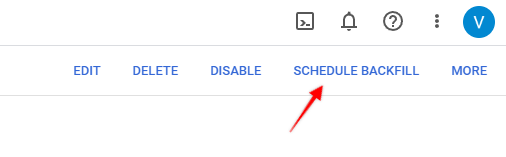

From your Transfer details page (that opened automatically after the transfer was created) find the “Schedule backfill” link in the top right corner.

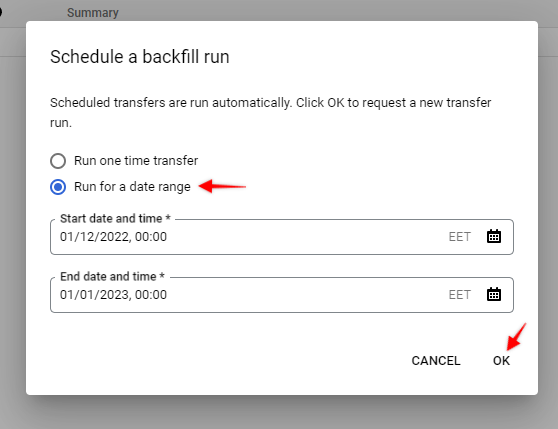

Then select “Run for a date range”, select time interval that you are interested in, and click OK.

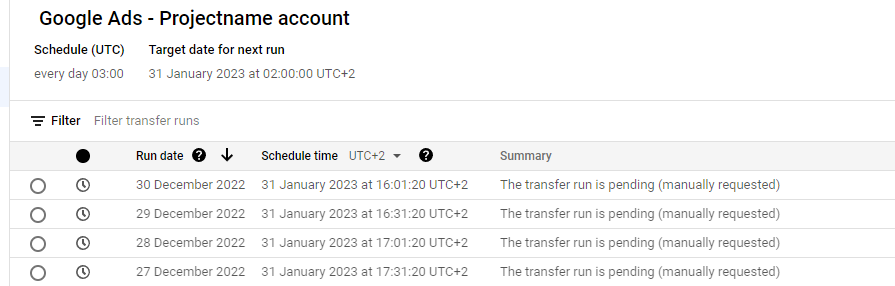

Once you refresh the project details page you should see all pending queries for each date and their current status. You can re-run any queries that failed for some reason and have an overview of what data is available. After backfill is scheduled it might take some time (multiple hours) before all requested data is loaded into BigQuery.

Once data for at least 1 day was exported, all tables will be available under your dataset name as usual.

As a next step, you can explore how you can query partitioned tables from this export and some sample queries provided by Google.